MARCH 2022

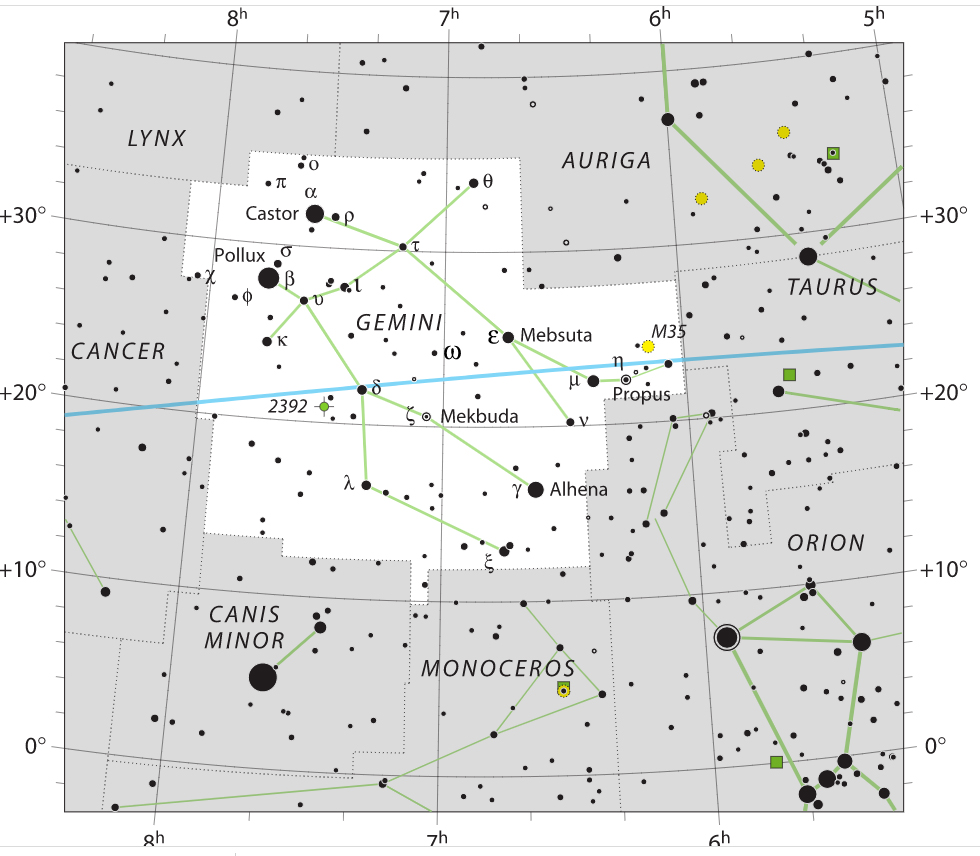

Imaging the Gemini Constellation

[This is just one of many articles in the author’s Astronomy Digest.]

In early March, Gemini is high in the southern sky, so it is an excellent time to take a wide field image framing the constellation. I chose to use a Sony A7S full frame camera. It has only a 12 megapixel sensor, but because its pixels are quite large (8.4 microns across) it has excellent low light capability. In fact, its ISO range extends up to 102,400, and to 409,600 in extended mode! One would never use such an ISO for imaging and, in fact, I used an ISO of 400 for this exercise. However, the camera has a most wonderful capability: when imaging in very low light, the live view display ramps up the ISO so that stars become visible. It is thus easy to ‘frame’ the constellation before imaging. A second feature, now common to many cameras, is focus peaking. When it is enabled, stars are highlighted in red once they are in focus, so the camera is perfect for wide field constellation imaging. [As I write, a ‘Like New’ A7S can be obtained from MPB for ~£654 (as mine was), with ‘Good’ ones for ~£500.]

One then has to choose a lens that will nicely cover the constellation. The URL below links to a camera field of view calculator. My best prime lens, used with an adapter on the Sony A7S, is a Zeiss 45 mm Planar. This superb lens was designed for the Contax G film camera, brought out in 1994, and is regarded as one of the highest resolving prime lenses ever produced.

Using a camera field of view calculator:

https://www.scantips.com/lights/fieldofview.html#top

gave a field of view of 43.6 x 29 degrees. This would nicely encompass the constellation.

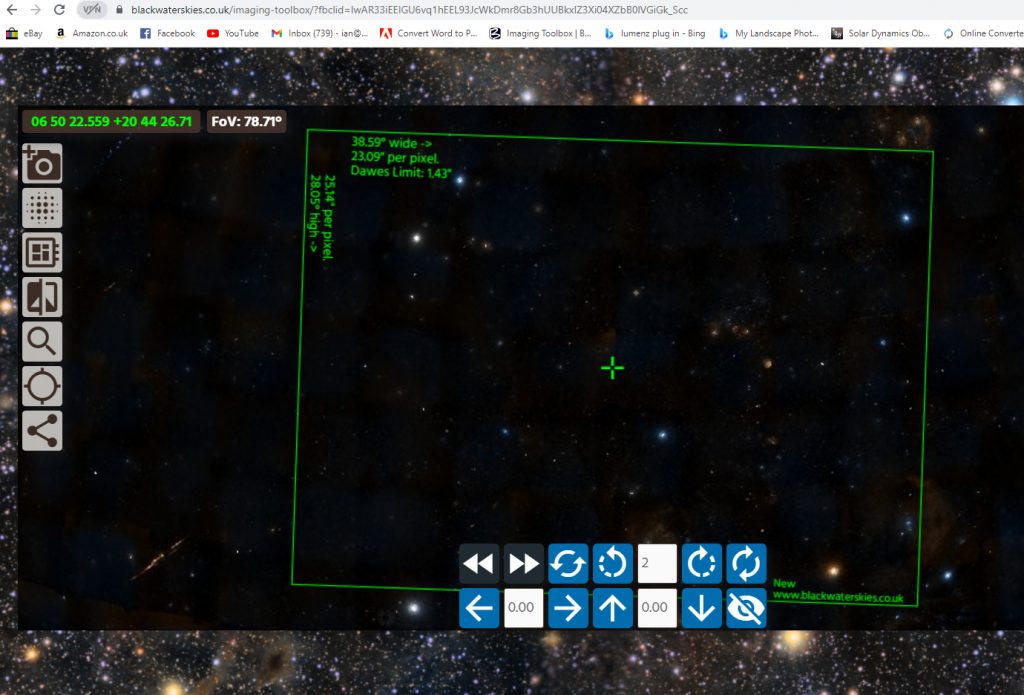
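For a rectilinear lens focused at infinity, the angular field along each sensor dimension follows from simple trigonometry: 2 × arctan(d / 2f), where d is the sensor dimension and f the focal length. The short Python sketch below applies this to the 45 mm lens. It assumes the nominal 36 x 24 mm full-frame format rather than the exact A7S sensor dimensions, the helper name `field_of_view_deg` is purely illustrative, and it is not necessarily how either online calculator does its sums.

```python
import math


def field_of_view_deg(sensor_mm: float, focal_length_mm: float) -> float:
    """Angular field of view (degrees) along one sensor dimension for a
    rectilinear lens focused at infinity: 2 * atan(d / (2f))."""
    return math.degrees(2 * math.atan(sensor_mm / (2 * focal_length_mm)))


# Assumed nominal full-frame dimensions; the real sensor may differ slightly.
SENSOR_WIDTH_MM = 36.0
SENSOR_HEIGHT_MM = 24.0
FOCAL_LENGTH_MM = 45.0  # the Zeiss Planar described above

width_deg = field_of_view_deg(SENSOR_WIDTH_MM, FOCAL_LENGTH_MM)
height_deg = field_of_view_deg(SENSOR_HEIGHT_MM, FOCAL_LENGTH_MM)
print(f"{width_deg:.1f} x {height_deg:.1f} degrees")  # prints "43.6 x 29.9 degrees"
```

The horizontal figure agrees with the 43.6 degrees quoted above. For wide fields the arctangent matters: the cruder d / f approximation would overstate the width.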

There is a very slight problem: the ‘Blackwater Skies’ field of view calculator gives a field size of 38.6 x 25.1 degrees, so the two calculators do not quite agree.

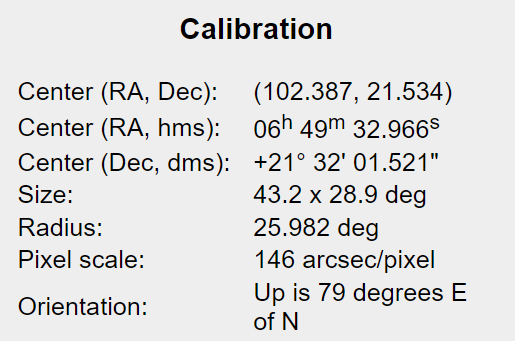

But there is an arbiter! As described below, the image was submitted to Astrometry.net to be plate solved, and this was the result: Blackwater Skies is out by a little, but not enough to be a real problem.
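Astrometry.net can optionally be given an approximate image scale, which narrows its search. As a rough sketch, the scale per pixel can be estimated from the 8.4 micron pixel size and the 45 mm focal length given above, using the small-angle relation (206,265 arcseconds per radian × pixel size ÷ focal length). The function name here is illustrative only.

```python
# Arcseconds in one radian (180 * 3600 / pi).
ARCSEC_PER_RADIAN = 206_264.806


def image_scale_arcsec_per_px(pixel_um: float, focal_length_mm: float) -> float:
    """Small-angle image scale: the angle subtended by one pixel, in arcseconds."""
    pixel_mm = pixel_um / 1000.0  # convert microns to millimetres
    return ARCSEC_PER_RADIAN * pixel_mm / focal_length_mm


# Sony A7S pixel size and Zeiss 45 mm focal length, as quoted in the text.
scale = image_scale_arcsec_per_px(8.4, 45.0)
print(f"{scale:.1f} arcsec/pixel")  # prints "38.5 arcsec/pixel"
```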